Modern machine learning systems move data through many layers: online services, log streams, storage systems, and batch jobs. Each part needs numbers that stay clean, fields that stay organized, and records that stay compact. When the prediction quality must be tracked for every model version, the setup must be simple enough to maintain and strong enough to measure errors correctly.

This is where pairing MSE with Avro becomes useful. MSE gives you a direct way to see how far predictions drift from real values. Avro gives you a safe way to store those prediction events across many systems without losing structure.

Teams that send millions of prediction events per day need a format that stays small, easy to process, and friendly for updates. Avro fits that need. And teams that need a stable error number need MSE. When both work together, your ML system becomes easier to track, easier to debug, and easier to maintain at scale.

This guide explains both topics in a clean, simple way and shows how they fit inside real pipelines used in production.

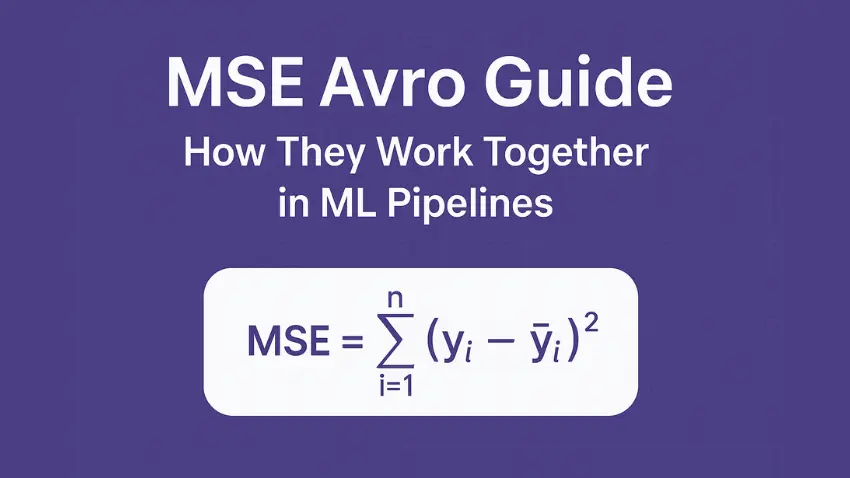

What MSE (Mean Squared Error) Measures

MSE is a number that shows how far your predictions are from actual values. Think of each prediction as a small comparison:

- Real value

- Predicted value

The difference between them gets squared. All squared values get added. Then the sum gets divided by the number of samples. That final number is the MSE.

A low MSE means the model predictions stay close to real values. A high MSE means the model predictions move far away from real values.

MSE suits regression tasks because every prediction yields a numeric error that can be squared and averaged.
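The steps described above (square each difference, add them up, divide by the number of samples) can be sketched in a few lines of Python. The function name `mean_squared_error` and the sample numbers are illustrative assumptions, not taken from any particular library.

```python
def mean_squared_error(actuals, predictions):
    """Average of squared differences between actual and predicted values."""
    if len(actuals) != len(predictions):
        raise ValueError("actuals and predictions must have the same length")
    if not actuals:
        raise ValueError("at least one sample is required")
    squared = [(a - p) ** 2 for a, p in zip(actuals, predictions)]
    return sum(squared) / len(squared)


# Illustrative values only: the differences are 1, 0, and -2.
actuals = [3.0, 5.0, 8.0]
predictions = [2.0, 5.0, 10.0]
mse = mean_squared_error(actuals, predictions)
print(mse)
```

Here the squared differences are 1, 0, and 4, so the result is 5 divided by 3.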

The MSE Formula
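Written out with the variables defined in the list that follows, the standard definition is:

$$
\mathrm{MSE} = \frac{1}{n} \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2
$$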

Variables Explained

- n — total samples

- yᵢ — real value

- ŷᵢ — predicted value

- yᵢ − ŷᵢ — difference between real and predicted

- (yᵢ − ŷᵢ)² — squared difference

Why MSE Uses Squared Differences

Squared differences help in three ways:

- Negative and positive errors do not cancel each other.

- Bigger mistakes get a higher weight.

- Training algorithms get smooth numeric behavior when updating model weights.

Because of these traits, MSE is common in training loops, tuning, and early testing.
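A small numeric sketch makes the first two points concrete. The error values are made up for illustration.

```python
# Illustrative signed errors (actual - predicted); the values are made up.
errors = [4.0, -4.0, 1.0, -1.0]

# Plain averaging lets positive and negative errors cancel out.
mean_error = sum(errors) / len(errors)

# Squaring first removes the sign, so nothing cancels.
mean_squared = sum(e * e for e in errors) / len(errors)

# Share of the total squared error contributed by each sample.
total_squared = sum(e * e for e in errors)
shares = [e * e / total_squared for e in errors]

print(mean_error)    # 0.0
print(mean_squared)  # 8.5
print(shares)
```

The mean of the raw errors is zero even though every prediction is wrong, while the mean of the squares is 8.5. An error four times larger contributes sixteen times as much to the total.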

When MSE Works Best (Regression, Continuous Variables)

MSE fits best when:

- You predict continuous numeric values

- Errors are roughly balanced between over-prediction and under-prediction

- Outliers need to stand out, since squaring amplifies them

- Big mistakes cost more than many small ones

Models for pricing, load forecasting, demand planning, scoring, rating, and similar tasks rely on MSE at some point.

MSE vs MAE vs RMSE — Quick Comparison Table

| Metric | Measures | Outlier Sensitivity | Units | Typical Uses |
| --- | --- | --- | --- | --- |
| MSE | Squared error | High | Squared target units | Training, tuning, metric logs |
| RMSE | Square root of MSE | High | Same as target | Reports for leaders |
| MAE | Absolute error | Lower | Same as target | Dashboards with noisy data |

Quick notes:

- Use MSE during training.

- Use RMSE for simple communication with leaders.

- Use MAE when the data is noisy and a few outliers should not dominate the number.
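To see how differently the three metrics react to a single large miss, here is a minimal sketch. The helper name `regression_metrics` and the data are assumptions made for illustration.

```python
import math


def regression_metrics(actuals, predictions):
    """Return MSE, RMSE, and MAE for paired actual and predicted values."""
    errors = [a - p for a, p in zip(actuals, predictions)]
    n = len(errors)
    mse = sum(e * e for e in errors) / n
    mae = sum(abs(e) for e in errors) / n
    return {"mse": mse, "rmse": math.sqrt(mse), "mae": mae}


actuals = [10.0, 12.0, 14.0, 16.0]

# Made-up predictions: every error has magnitude 1.0.
clean = regression_metrics(actuals, [11.0, 11.0, 15.0, 15.0])

# Same predictions, except the last one misses by 9.0.
with_outlier = regression_metrics(actuals, [11.0, 11.0, 15.0, 25.0])

print(clean)
print(with_outlier)
```

With the clean data all three metrics equal 1.0. After the single outlier, MAE rises to 3.0 while MSE jumps to 21.0, which is why MSE is described as highly sensitive to outliers.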

What Apache Avro Is (Schema-Driven Serialization)

Apache Avro is a data format that stores records in a compact binary form. Every Avro record follows a schema. The schema describes:

- field names

- field types

- default values

- logical types (such as timestamps or decimals)

Producers and consumers both work from a schema, so every prediction record is predictable and easy to read in any tool or service.

Key Features: Compact, Fast, Binary Format

Avro is widely used because it:

- keeps file size small

- supports many programming languages

- stores the schema alongside the data in its container files

- handles millions of events safely

- fits well in Kafka topics, object storage, or streaming systems

Its small size is helpful for ML systems that send prediction logs continuously.

Avro vs JSON vs Parquet — Technical Comparison

| Format | Best For | Strengths | Weak Spots |
| --- | --- | --- | --- |
| Avro | Row-based events | Compact, schema included, simple changes | Not ideal for large column-based scans |
| JSON | APIs and debugging | Easy to read manually | Heavy size, slow for ML pipelines |
| Parquet | Warehouse analytics | Small size, fast scans | Not ideal for single-row messaging |

- Avro fits prediction streams.

- Parquet fits analytics in data lakes.

- JSON fits debugging and small web calls.

How Avro Handles Schema Evolution

Avro allows safe updates such as:

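- adding fields with default values

- renaming fields with aliases

- promoting numeric types when safe (for example, int to long or float to double)

- marking optional fields with unions

Because the writer's schema travels with the data (embedded in container files, or looked up through a schema registry), older readers can skip fields they do not know about instead of breaking when new fields appear.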
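The "add a field with a default" case can be sketched with the fastavro library. This is a minimal sketch under assumptions: fastavro is installed, and the schema name, field names, and the helper `roundtrip` are all illustrative.

```python
import io

# "Old" schema: the record before the change (names are illustrative).
OLD_SCHEMA = {
    "type": "record",
    "name": "Prediction",
    "fields": [
        {"name": "prediction_id", "type": "string"},
        {"name": "prediction", "type": "double"},
    ],
}

# "New" schema: adds an optional field with a default value.
NEW_SCHEMA = {
    "type": "record",
    "name": "Prediction",
    "fields": [
        {"name": "prediction_id", "type": "string"},
        {"name": "prediction", "type": "double"},
        {"name": "actual", "type": ["null", "double"], "default": None},
    ],
}


def roundtrip(writer_schema, reader_schema, record):
    """Write one record with writer_schema, then read it back with reader_schema."""
    # Imported here so the schema definitions stay usable without fastavro installed.
    from fastavro import parse_schema, reader, writer

    buffer = io.BytesIO()
    writer(buffer, parse_schema(writer_schema), [record])
    buffer.seek(0)
    # The container file embeds the writer schema; passing reader_schema
    # asks fastavro to resolve the two schemas while decoding.
    return list(reader(buffer, reader_schema=parse_schema(reader_schema)))
```

Calling `roundtrip(OLD_SCHEMA, NEW_SCHEMA, {"prediction_id": "p-1", "prediction": 28.7})` should return the record with `actual` filled in as `None` from the default. Going the other way, `roundtrip(NEW_SCHEMA, OLD_SCHEMA, {"prediction_id": "p-2", "prediction": 30.0, "actual": 31.5})` should return the record without the `actual` field, because the older reader schema does not declare it.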

Why MSE and Avro Work Perfectly Together

Using MSE and Avro in the same workflow helps the system record and measure prediction quality reliably. Avro holds predictions, actual values, timestamps, model versions, squared errors, and slice information. MSE numbers can be calculated later from these records.

Together they provide:

- small event size

- stable field structure

- replayable logs

- good support for late-arriving labels

- clear tracking of model versions

ML teams with many services benefit a lot from this combination.

Designing an Avro Schema for ML Predictions and Metrics

Essential Fields for Prediction Data

A standard prediction record includes:

- prediction — numeric value

- actual — numeric value (may come later)

- squared_error — error value if known

- model_version — helps separate old and new model runs

- timestamp — time of prediction

Fields like prediction_id, featureset_version, and slice keys can be added if needed.
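One possible schema for such a record is sketched below as a Python dictionary, which serializes to the JSON text Avro expects. The record name, namespace, and `doc` string are assumptions. The `timestamp-millis` logical type stores milliseconds since the Unix epoch, so a pipeline that logs seconds would need to convert or use a plain `long`.

```python
import json

# Hypothetical schema for one prediction event; field names follow the list above.
PREDICTION_SCHEMA = {
    "type": "record",
    "name": "PredictionEvent",
    "namespace": "example.ml",  # assumed namespace
    "fields": [
        {"name": "prediction_id", "type": "string", "doc": "Join key for late labels"},
        {"name": "model_version", "type": "string"},
        {"name": "prediction", "type": "double"},
        # Optional: the label may arrive after the prediction is logged.
        {"name": "actual", "type": ["null", "double"], "default": None},
        # Optional: only known once the label exists.
        {"name": "squared_error", "type": ["null", "double"], "default": None},
        # Logical type: milliseconds since the Unix epoch, stored as a long.
        {"name": "timestamp", "type": {"type": "long", "logicalType": "timestamp-millis"}},
    ],
}

# Serialize to the JSON text that an .avsc file or a schema registry would hold.
schema_json = json.dumps(PREDICTION_SCHEMA, indent=2)
print(schema_json)
```

Putting `"null"` first in each union lets the default be `None`, which is what makes `actual` and `squared_error` safe to leave empty at prediction time.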

Versioning Predictions with Avro

Model versioning helps:

- track performance

- detect regressions

- compare older models to newer ones

- review errors per version

Every record carries a model_version field so the pipeline stays organized.

Best Practices for Schema Longevity

To keep an Avro schema safe for years:

- use logical timestamp types

- add defaults to new fields

- mark late-arriving fields as optional

- set aliases when renaming

- document every field

- store schemas in a registry

- avoid sudden changes

A stable schema avoids breaking pipelines.

MSE Calculation Techniques That Fit Avro Pipelines

Real-Time MSE Calculation (Stream Processing)

When real values arrive quickly:

- compute squared error on each prediction

- push numeric results into a metrics topic

- track rolling windows

- set alerts based on model_version

This works well for low-latency systems.
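The rolling-window step can be sketched without any streaming framework. The class name `RollingMSE` and the window size are assumptions; in a real stream processor the same logic would live inside a windowed or keyed operator.

```python
from collections import defaultdict, deque


class RollingMSE:
    """Rolling MSE over the last `window` labeled predictions per model version."""

    def __init__(self, window=1000):
        # One bounded queue of squared errors per model_version.
        self._errors = defaultdict(lambda: deque(maxlen=window))

    def update(self, model_version, prediction, actual):
        """Record one labeled prediction and return the current rolling MSE."""
        errors = self._errors[model_version]
        # When the deque is full, appending drops the oldest squared error.
        errors.append((actual - prediction) ** 2)
        return sum(errors) / len(errors)


tracker = RollingMSE(window=2)
first = tracker.update("v1", prediction=10.0, actual=11.0)
second = tracker.update("v1", prediction=10.0, actual=13.0)
third = tracker.update("v1", prediction=10.0, actual=10.0)
other = tracker.update("v2", prediction=5.0, actual=7.0)
print(first, second, third, other)
```

Keying the state by `model_version` keeps each version's window separate, so an alert threshold can be checked against the value returned for that version alone.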

Batch MSE Calculation (Data Lake / Warehouse)

When labels arrive later:

- prediction logs are stored in Avro files

- labels are stored in a separate dataset

- a batch tool joins them by prediction_id

- MSE, RMSE, and MAE are calculated

- results are stored again in Avro metrics files

This workflow handles millions of rows smoothly.
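The join-then-compute step looks like this in plain Python. The rows are made up and stand in for records already decoded from Avro; a production job would do the same join in Spark, Beam, or a warehouse query.

```python
import math

# Made-up rows standing in for decoded prediction logs and a separate label dataset.
predictions = [
    {"prediction_id": "p-1", "model_version": "v1", "prediction": 10.0},
    {"prediction_id": "p-2", "model_version": "v1", "prediction": 20.0},
    {"prediction_id": "p-3", "model_version": "v1", "prediction": 30.0},
]
labels = [
    {"prediction_id": "p-1", "actual": 12.0},
    {"prediction_id": "p-2", "actual": 19.0},
    # p-3 has no label yet, so it is left out of the metrics.
]

# Inner join on prediction_id: only predictions with a label produce an error.
actual_by_id = {row["prediction_id"]: row["actual"] for row in labels}
errors = [
    actual_by_id[p["prediction_id"]] - p["prediction"]
    for p in predictions
    if p["prediction_id"] in actual_by_id
]

mse = sum(e * e for e in errors) / len(errors)
metrics = {
    "count": len(errors),
    "mse": mse,
    "rmse": math.sqrt(mse),
    "mae": sum(abs(e) for e in errors) / len(errors),
}
print(metrics)
```

Reporting `count` next to the metrics shows how many predictions actually had labels, which matters when labels arrive late.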

Aggregation Patterns: Daily, Weekly, Monthly Metrics

Teams group metrics by:

- hour

- day

- week

- region

- user type

- device

- model version

This helps leaders track long-term model behavior.
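A grouping by day and model version can be sketched as follows. The events are made up, and timestamps are assumed to be Unix seconds in UTC.

```python
from collections import defaultdict
from datetime import datetime, timezone

DAY_START = 1735689600  # 2025-01-01T00:00:00Z

# Made-up labeled events.
events = [
    {"model_version": "v1", "timestamp": DAY_START + 3600, "prediction": 10.0, "actual": 12.0},
    {"model_version": "v1", "timestamp": DAY_START + 7200, "prediction": 10.0, "actual": 10.0},
    {"model_version": "v1", "timestamp": DAY_START + 86400 + 60, "prediction": 5.0, "actual": 8.0},
    {"model_version": "v2", "timestamp": DAY_START + 3600, "prediction": 11.0, "actual": 12.0},
]

# (day, model_version) -> [sum of squared errors, count]
totals = defaultdict(lambda: [0.0, 0])
for event in events:
    day = datetime.fromtimestamp(event["timestamp"], tz=timezone.utc).date().isoformat()
    bucket = totals[(day, event["model_version"])]
    bucket[0] += (event["actual"] - event["prediction"]) ** 2
    bucket[1] += 1

daily_mse = {key: sq_sum / count for key, (sq_sum, count) in totals.items()}
print(daily_mse)
```

Keeping the sum of squared errors and the count, rather than only the average, means daily buckets can later be combined into weekly or monthly MSE without going back to the raw events.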

Minimizing Compute Cost While Handling Large Avro Files

Tips:

- partition by model_version and date

- compress with snappy or deflate

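- read only the fields you need through a reader schema (Avro is row-based, so this saves decoding work rather than disk reads)

- keep the record size small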

- push heavy math to batch jobs

With these steps, large Avro datasets stay manageable.

A Complete ML Pipeline Example Using Avro + MSE

Step-by-Step Flow

1. Model generates predictions

A prediction event is created with prediction_id, prediction value, and model_version.

2. Predictions stored in Avro

The application sends Avro-encoded records to Kafka or object storage.

3. Avro ingested into Spark / Beam / Flink

These tools read Avro files through their Avro connectors or modules.

4. MSE computed

Spark or Beam calculates MSE from actual and predicted values.

5. Results pushed to dashboards

Metrics appear in Superset, Looker, or Metabase for easy reading.

Sample Avro Record Example

Shown as JSON for readability:

```json
{
  "prediction_id": "p-10488",
  "model_version": "v4.1.0",
  "prediction": 28.7,
  "actual": 30.1,
  "squared_error": 1.96,
  "timestamp": 1738102210
}
```

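Example Code Snippet (Python)

Python Example

```python
from fastavro import reader

total = 0.0
count = 0

# Stream records from the Avro container file instead of loading them all at once.
with open("predictions.avro", "rb") as f:
    for r in reader(f):
        # Skip predictions whose label has not arrived yet.
        if r["actual"] is not None:
            diff = r["actual"] - r["prediction"]
            total += diff * diff
            count += 1

# Avoid dividing by zero when no labeled rows exist.
mse = total / count if count > 0 else None
print("MSE:", mse)
```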

Reporting MSE to Stakeholders

MSE Output Format Leaders Understand

Leaders prefer:

- RMSE since it shares target units

- volume counts

- date windows

- slice-level numbers

- short notes

This gives them easy, clear signals.

Example Weekly or Monthly Review Table (Readable Format)

| Model Version | Window | Count | MSE | RMSE | MAE | Notes |
| --- | --- | --- | --- | --- | --- | --- |
| v3.2.1 | 2025-07-28 to 2025-08-03 | 1,254,310 | 6.41 | 2.53 | 1.98 | Stable |
| v3.2.1 | 2025-08-04 to 2025-08-10 | 1,301,772 | 7.20 | 2.68 | 2.04 | Delay in label arrival |
| v3.3.0 | 2025-08-11 to 2025-08-17 | 1,415,006 | 5.95 | 2.44 | 1.91 | Better tail behavior |
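The RMSE column does not need to be stored separately, since it is the square root of the MSE column. A quick sketch using the MSE values from the table (the dictionary keys are just the window start dates):

```python
import math

# MSE values copied from the review table above, keyed by window start date.
weekly_mse = {"2025-07-28": 6.41, "2025-08-04": 7.2, "2025-08-11": 5.95}

# RMSE is the square root of MSE, rounded to two decimals for reporting.
weekly_rmse = {week: round(math.sqrt(mse), 2) for week, mse in weekly_mse.items()}
print(weekly_rmse)
```

The results match the RMSE column: 2.53, 2.68, and 2.44.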

Common Pitfalls and How to Avoid Them

Pitfall 1: Using JSON Instead of Avro — Higher Storage + Slower Compute

- JSON creates large messages and slows processing.

- Avro keeps messages smaller and faster to process.

Pitfall 2: Schema Evolution Mistakes

- Changes without defaults break readers.

- Always use safe updates.

Pitfall 3: Storing Text Instead of Numeric Values

- Numbers stored as strings slow down metric jobs.

- Keep numeric fields numeric.

Pitfall 4: Using the Wrong Metric (MSE for Classification)

- MSE is a poor fit for classification outputs.

- Use classification metrics for those cases.

Pitfall 5: Missing Timestamps and Model Versions

- Without clear timestamps, events cannot be sorted.

- Without model_version, metrics mix across versions.

Real-World Use Cases Where MSE + Avro Shine

Model Performance Tracking at Scale

- Large ML systems generate millions of prediction logs.

- Avro keeps them small enough to store and fast enough to read.

Feature Store Integration

- Feature stores stream features and predictions.

- Avro transfers these rows in a clean, compact format.

Streaming ML Predictions

Avro messages in Kafka support quick updates when predictions arrive nonstop.

Offline Batch Validation

Nightly or weekly validation jobs use Avro records stored in object storage to compute MSE, RMSE, and MAE.

Final Notes — Why MSE + Avro Helps ML Teams Long Term

Combining MSE with Avro gives ML teams a safe, stable way to record predictions and evaluate model behavior across many services. MSE offers a simple numeric signal of prediction drift. Avro ensures every record stays structured and compact even when the schema changes over time.

This pairing works with Spark, Beam, Flink, Kafka, object storage, and most languages used in ML engineering. It supports both real-time error checks and large batch evaluations, and it scales from small systems to very large ones with the same structure.

Teams that use MSE and Avro together in their pipeline gain clear prediction logs, cleaner schema control, manageable storage size, and safer updates for later versions.

Frequently Asked Questions

- What is MSE used for in machine learning?

It shows how far predictions are from real values in regression tasks.

- Why is MSE preferred over MAE in many pipelines?

Squared errors help training tools update model weights smoothly.

- How is Avro better for ML data storage than JSON or CSV?

Avro is smaller, faster, and carries the schema.

- Can I use Avro for storing true labels and predictions?

Yes, Avro fits row-based ML events.

- How does Avro handle schema changes over time?

Through defaults, aliases, and safe updates.

- Can Avro store very large ML datasets?

Yes, Avro files scale through partitions in object storage.

- Can MSE be visualized for model performance improvement?

Yes, tools like Looker, Superset, or Metabase can display MSE, RMSE, and MAE.

- Does Avro work with Spark, Hadoop, Flink, and Beam?

Yes, Avro is supported in many distributed systems.

- Can MSE be computed directly inside a pipeline using Avro files?

Yes, both streaming and batch tools can compute it.

- Is MSE the best metric for regression problems?

MSE is widely used, but RMSE and MAE can also support clear reports.